Emotional Clicktivism: Facebook Reactions and affective responses to visuals

Team Members

Anna Scuttari, Nathan Stolero, Arran Ridley, Livia Teernstra, Denise van de Wetering, Michele Invernizzi, Marloes Geboers

Summary of Key Findings

Reactions usage shows a strong correlation between Facebook's Sad and Angry buttons, whereas the Love and Haha buttons go together but are more distinct. The difference between the strong correlation of the negative emotions and the weaker correlation of the positive emotions might suggest that, in the case of the Syrian revolution, users experienced positive emotions in a more nuanced way than negative ones. New emotions appear to emerge from the combined use of the Sad and Angry buttons: complex emotions such as frustration might only become apparent when both buttons are used together. The same holds for captivation, which emerges from the combination of Love and Wow.

When looking into images that go with the Love button, we see how it is used to communicate pity and solidarity. Images that attracted the Haha button include re-appropriated images that are often used to mock politicians.

Zooming in on the spikes in a bump chart of Reactions usage over time, the most interesting are the ‘sad-only’ spikes and the ‘love-only’ spikes (one large and one smaller for the latter). We found connections between these spikes and what was actually happening in the conflict. Two of the three Sad spikes correspond to exceptionally violent periods in Aleppo (end of April 2016 and the first half of December 2016), and the third (smaller and shorter) corresponds to the chemical attack in the Idlib region in 2017. The love-only spikes of August 2016 and the end of October 2016 correspond to larger and smaller rebel victories, such as taking back territory (August 2016) and ceasefires (end of October 2016).

Figure 1 Bumpchart of Reactions usage over time

These findings are also reflected in the tags retrieved via the Vision API. The most engaged-with images in the Love/Haha correlated section predominantly show celebrating crowds and some glorification of military (albeit ‘rebel’) symbols, such as tanks and a fighter’s foot on a statue of Assad. Soldier was among the three most frequent tags: 5,979 images were tagged this way. Images tagged with soldier received significantly more comments, reactions and engagement than images without that tag (see the Findings section for statistics), and more positive emotions were related to them. This suggests that the page’s community is passionate about the revolution, of which a (rebel) soldier is a clear symbol. The Sad and Angry buttons, which are strongly correlated (r = .733), go with tags describing bodily features visible in the images (such as nose and skin); these are often images of dead bodies, predominantly of deceased children (see the findings of weeks 1 and 2). The other most frequent tags were vehicle and protest. The vehicle tag is associated with significantly more positive Reactions, similar to the soldier tag, but without the increase in comments seen for soldier images. The protest tag showed a lack of emotional responses through Reactions; we hypothesize that this has to do with the impersonal character of the images tagged this way, which often showed unidentifiable crowds at protests.

1. Introduction

In an earlier account of the workings of the like economy, Gerlitz and Helmond (2013) show how Facebook enables particular forms of social engagement and affective responses through its protocol, collapsing the social with the traceable and marketable and filtering it for positive affects. This project aims to build on this by providing insight into how the Reactions buttons, introduced in 2016, relate to this filtering of content that evokes certain emotional reactions.

The introduction of Facebook’s Reactions in 2016 exemplifies how a platform affords emotional engagement with content. While still refraining from implementing a Dislike button, which could not be collapsed into the composite Like counter (Gerlitz and Helmond, 2013), Facebook added new buttons, including buttons that portray negativity, namely Angry and Sad. This project zooms in on the use of these (negative) buttons in the Syrian news space of public Facebook pages.

In the commercial endeavour of Facebook, Gerlitz and Helmond (2013) argue, it is only the traceable social that matters to Facebook, as the still intensive, non-measurable, non-visible social is of no actual value for the company: it can neither enter data mining processes nor be scaled up further. Following Gerlitz and Helmond, we should understand Reactions as an attempt to further ‘metrify’ a part of what might have been conceived as non-measurable: emotions evoked in users.

Social buttons both pre-structure and enable the possibilities of expressing affective responses to, or engaging with, web content, while at the same time measuring and aggregating them (Gerlitz and Helmond, 2013). By implementing the Reactions buttons, Facebook adds several layers to the original Like button, extending the ways in which content on Facebook can be qualified affectively. The question arises whether the collective use of Reactions can be 'repurposed' to pinpoint certain publics within a page and to understand the visual content they engage with.

2. Initial Data Sets

The Facebook page Syrian Revolution Network (https://www.facebook.com/Syrian.Revolution/), with 1,937,954 followers, was scraped using Netvizz over a time span beginning just before the implementation of Reactions (16 February 2016 to 27 June 2017). Reactions were implemented on 24 February 2016.

For scraping we used the page data module, collecting full stats for posts by the page only. See also the Methodology section.

In week 2 we went across pages, working with several datasets, all derived via Netvizz.

3. Research Questions

How are Reactions used collectively? How do visual characteristics associate with the use of Reactions buttons?

4. Methodology

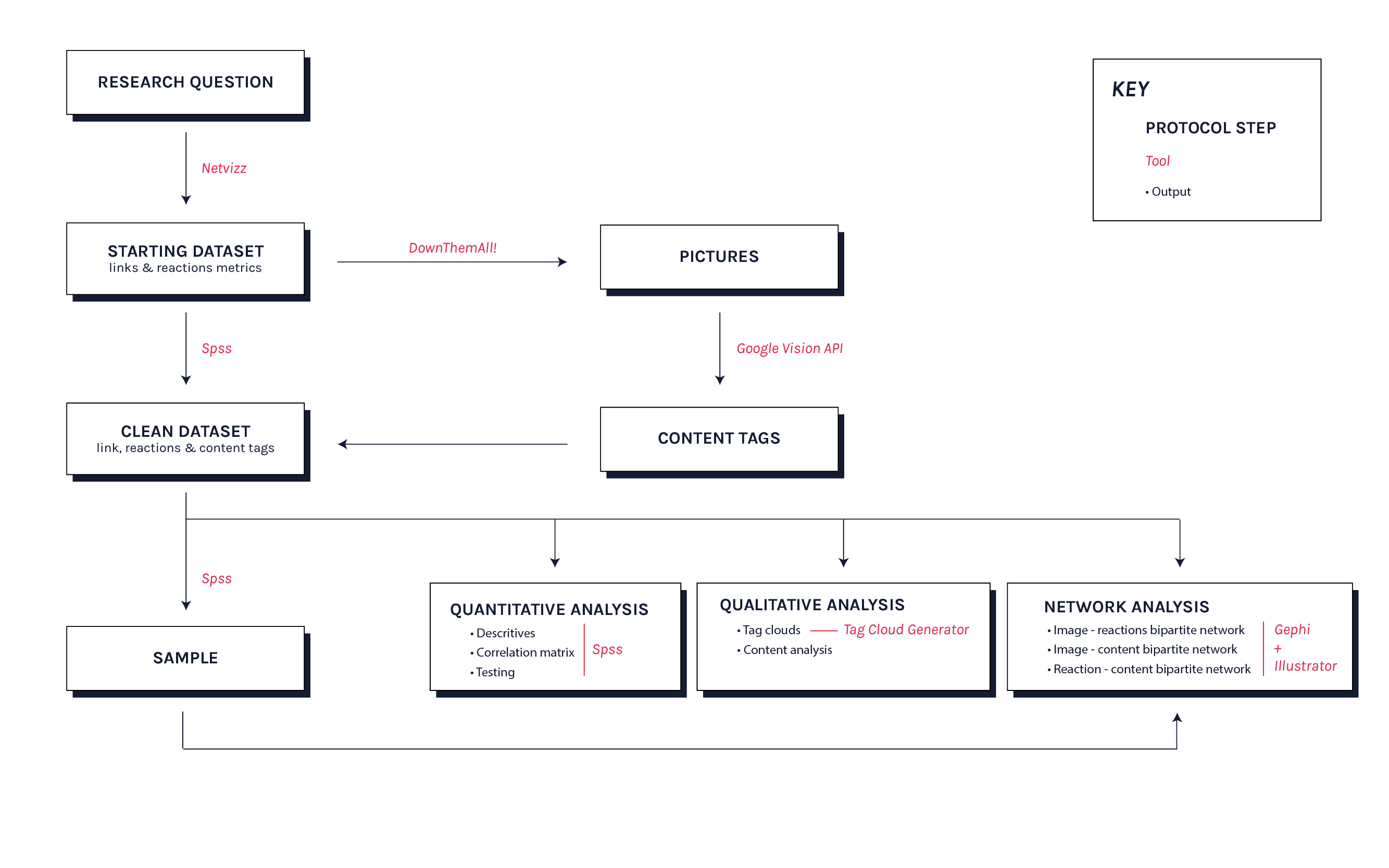

The methods diagram (Figure 3) depicts our research flow. In general, the steps, elaborated below, were:

- Research question
- Starting dataset: scrape all posts using Netvizz to create a starting dataset.
- Pictures: download all images using “DownThemAll!”.
- Content tags: use the Google Vision API tool to generate content tags for the pictures.
- Clean dataset: use statistical measures to create a clean dataset combining the photos tagged with the Google Vision API tool, their links and their reactions.
- Sampling: create a representative sample from the dataset.
- Analyses: quantitative analysis (an explorative visual timeline of the different reactions, word cloud, descriptive statistics, correlation matrix and testing), qualitative content analysis and network analysis (using Gephi and Illustrator).

Figure 3 Methods diagram


Creating the Starting Dataset: Data Scraping using Netvizz

We used Netvizz to scrape data from 16 February 2016 to 27 June 2017. We started a few days before Facebook officially launched the Reactions option, and our data ended on the day we ran the scraping process, so we captured all data available since Facebook launched the Reactions feature. We scraped full data of posts published by the page only. At first we scraped in three-month time slots, but this caused many problems (a slow scraping process, tokens revoked midway, incorrect data), so the missing and defective data were re-scraped in shorter one-month time slots.

Tagging the Pictures using the Google Vision API (MemeSpector)

All files were analyzed using the Google Vision API tool to retrieve the tags associated with each picture. Each file scraped with Netvizz was run separately through the Google Vision API tool.

To test the validity of the Google Vision API tool for our analysis, we assessed its convergent validity against manual coding of a small proportion of the dataset. Using the function ‘=RAND()’, a random number was assigned to each row; sorting on this column (A-Z) randomised the dataset, and the first 20 rows were taken as a sample. For this sample, a qualitative reading of the images compared the tags with the actual contents of each image. The sample contained 154 tags, of which 24 were deemed inappropriate, ill-fitting or incorrect. This gives the tool a convergent validity score of 0.77.
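The spreadsheet procedure (a `=RAND()` column, a sort, then the first 20 rows) amounts to drawing a simple random sample without replacement. A minimal Python sketch of the same idea, assuming the rows are already loaded as a list; the function name `random_rows` and the fixed seed are illustrative only and not part of the original workflow:

```python
import random


def random_rows(rows, k=20, seed=None):
    """Mimic the spreadsheet procedure: attach a random key to every row,
    sort on that key and keep the first k rows."""
    rng = random.Random(seed)
    # The row index breaks ties so that rows themselves are never compared.
    keyed = [(rng.random(), index, row) for index, row in enumerate(rows)]
    keyed.sort()  # sorting on the random key shuffles the rows
    return [row for _, _, row in keyed[:k]]


posts = [f"post_{i}" for i in range(6409)]
sample = random_rows(posts, k=20, seed=1)
print(len(sample))  # 20
```

In plain Python, `random.sample(rows, k)` gives an equivalent draw in a single call; the explicit version above only mirrors the spreadsheet steps.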

Filtering: Creating a Clean Dataset

After all scraped files were analyzed, we merged them, cleaned the files and made certain adjustments (e.g. re-aligning columns that the Google Vision API script had shifted). The merged dataset included 10,339 posts of different types (e.g. status, link and video posts as well as photos). For the analysis, we filtered the file to include photo posts only, removed the October-December 2016 time slot because of defective data, and removed rows with missing values, reaching a final sample of 6,409 cases. We tested each reaction, and the total number of reactions, for normality using the Shapiro-Wilk test. The test showed that the data were not normally distributed (p < .001), so we should be wary of parametric tests that assume normality.

Sampling

While the statistical analysis used the full dataset, the network analysis used a sample. The sample size was calculated for a 95% confidence level on the total of 6,409 posts, resulting in N = 363. To test whether the sample was representative of the full dataset, we ran a one-sample t-test on the variable engagement. This variable reflects the total number of reactions, comments and shares of a post and is therefore a convenient summary of the different variables on which to base a comparison. The t-test showed that the sample mean (M = 2526.16, SD = 2686.06) did not significantly differ (t = 0.675, p = .500) from the full dataset (M = 2631.72, SD = 2905.34). The sample was therefore assumed to be representative of the whole dataset and could be used in the analysis. The lack of normality noted above is unlikely to distort this comparison of means, because t-tests are generally robust against violations of normality, particularly with large samples.

The Quantitative Analysis
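The Sampling paragraph gives only the 95% confidence level behind the sample of N = 363. A common way to derive such a figure is Cochran's formula with a finite population correction. The sketch below assumes a 5% margin of error and a proportion of 0.5 (maximum variability); neither value is stated in the text. Under those assumptions it reproduces N = 363 for 6,409 posts, which suggests, but does not confirm, that these were the settings used:

```python
import math


def sample_size(population, confidence_z=1.96, margin=0.05, proportion=0.5):
    """Cochran's sample size with a finite population correction.

    confidence_z=1.96 corresponds to a 95% confidence level; margin and
    proportion are assumptions, not values reported in the text.
    """
    n0 = confidence_z ** 2 * proportion * (1 - proportion) / margin ** 2
    corrected = n0 / (1 + (n0 - 1) / population)
    return math.ceil(corrected)


print(sample_size(6409))  # 363
```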

Visualizing the timeline

Using RAWGraphs, we created a visualization of the timeline of all posts on the page, divided by reaction type.
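A bump chart of this kind needs, for every time period, the total of each reaction and its rank within that period. A minimal sketch of that aggregation, assuming weekly bins (weeks starting on Monday) and post records with hypothetical keys `date`, `love`, `haha`, `wow`, `sad` and `angry`; the actual Netvizz column names and the binning used for the figure are not given in the text:

```python
from collections import defaultdict
from datetime import date, timedelta

REACTIONS = ["love", "haha", "wow", "sad", "angry"]


def weekly_ranks(posts):
    """Sum each reaction per week (weeks start on Monday) and rank the
    reactions within every week, 1 = most used. Ties keep REACTIONS order."""
    totals = defaultdict(lambda: dict.fromkeys(REACTIONS, 0))
    for post in posts:
        day = date.fromisoformat(post["date"])
        week_start = day - timedelta(days=day.weekday())
        for reaction in REACTIONS:
            totals[week_start][reaction] += post[reaction]
    rows = []  # long format: one row per week and reaction
    for week_start in sorted(totals):
        ordered = sorted(REACTIONS, key=lambda r: -totals[week_start][r])
        for rank, reaction in enumerate(ordered, start=1):
            rows.append((week_start.isoformat(), reaction,
                         totals[week_start][reaction], rank))
    return rows


posts = [
    {"date": "2016-12-12", "love": 3, "haha": 0, "wow": 1, "sad": 40, "angry": 12},
    {"date": "2016-12-14", "love": 5, "haha": 1, "wow": 0, "sad": 25, "angry": 9},
]
for row in weekly_ranks(posts):
    print(row)  # first row: ('2016-12-12', 'sad', 65, 1)
```

The long-format rows could then be written out with the `csv` module as input for a charting tool.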

Word Cloud of Vision API Tags

Based on the tags generated by the Google Vision API tool, we created a word cloud (http://www.jasondavies.com/wordcloud/) showing word frequencies. We filtered out the following ‘irrelevant’ words: text, font, product, advertising, tourism, adventure, geological, phenomena and brand (discussed further in the ‘Findings’ section). We had ascertained that these tags were misclassifications of the images; for instance, there were no images relating to tourism or adventure. Moreover, many images contained text (and were tagged as such), but we were interested not in the text in an image but in its visual content.
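The frequencies behind such a word cloud can be counted directly. A minimal sketch, assuming each image's Vision API tags are available as a list of strings (the input structure and the function name are hypothetical); the exclusion set is the list of words given above, matched against whole tags case-insensitively, so a multi-word label would only be removed if listed in full:

```python
from collections import Counter

# Words listed above as irrelevant or misclassified.
EXCLUDED = {"text", "font", "product", "advertising", "tourism",
            "adventure", "geological", "phenomena", "brand"}


def tag_frequencies(tag_lists, excluded=EXCLUDED):
    """Count how often each tag occurs across images, skipping excluded
    tags and empty strings (comparison is case-insensitive)."""
    counts = Counter()
    for tags in tag_lists:
        cleaned = (tag.strip().lower() for tag in tags)
        counts.update(tag for tag in cleaned if tag and tag not in excluded)
    return counts


images = [["soldier", "Text", "vehicle"], ["protest", "crowd", "font"], ["soldier", "crowd"]]
print(tag_frequencies(images).most_common(3))
```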

Statistical Procedures

The data were analysed using IBM SPSS v24 and Stata v13. We used Pearson correlations, t-tests and cluster analysis to understand the correlations among the reactions themselves, between reactions and other engagement factors (e.g. likes, shares) and between reactions and the Google Vision API tags, as well as how the different reactions cluster together and how they differ.
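For readers without SPSS or Stata, the pairwise Pearson correlations reported in Table 3 can be sketched in plain Python. This is an illustration with toy numbers, not the project data; it returns r only (no significance tests) and assumes equally long columns that are not constant (a constant column would divide by zero):

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson's r for two equally long numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Pairwise Pearson r for a dict of reaction name -> per-post counts."""
    return {(a, b): pearson(columns[a], columns[b])
            for a, b in combinations(columns, 2)}


columns = {  # toy per-post counts, for illustration only
    "sad": [12, 40, 3, 55, 20],
    "angry": [5, 18, 1, 21, 9],
    "love": [8, 2, 15, 1, 4],
}
for pair, r in correlation_matrix(columns).items():
    print(pair, round(r, 3))
```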

The Qualitative Analysis

Image Analysis

We used “DownThemAll!” to batch-download all photos in the sample file (N = 363) and ImageSorter to initially ‘eyeball’ the images. We employed two methods of sorting the images: the first was to choose a specific time slot, the second used the whole sample. Both presented similar patterns; see the Findings section and the week 2 Findings section for more.

The Network Analysis

The sample pictures were then imported into Gephi (after refining the file in OpenRefine) to build the nodes and edges. In total, nine networks were created, representing the image-reactions bipartite network, the image-content (Vision API tags) bipartite network and the reaction-content (Vision API tags) bipartite network. We used Illustrator to amend the visualizations, replacing text tags with image tags.

Making the networks (connected to 2, 3 and 4)

This protocol is for creating a network of connections between images and the reactions they generated on Facebook.

Tools used: Netvizz, Google Sheets, OpenRefine, DownThemAll!, Gephi, BBEdit, Illustrator

Steps taken:

Phase 01:

- Open the full data .tab file in Google Sheets;
- Keep only photos and filter out rows with errors.

Phase 02: Get the images

- Copy the full_picture column to a new file and save it as image-list.txt;
- Open the dTa! Manager (part of DownThemAll!) and load the .txt file (Import from file -> make sure the format in the options is set to text files -> Open);
- Select all the images -> select a folder to place them in -> in the mask input box type flatcurl (this renames the images with part of their URL);
- Download all the images.
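Phases 01 and 02 can also be scripted instead of done by hand in Google Sheets. A minimal sketch that reads the tab-separated Netvizz export and writes one image URL per line for DownThemAll!; the `full_picture` column comes from the steps above, while the `type` column with the value `photo`, the file names and the function names are assumptions. "Rows with errors" are approximated here as rows without an image URL:

```python
import csv


def photo_urls(lines):
    """Return the full_picture URLs of photo posts from tab-separated lines."""
    reader = csv.DictReader(lines, delimiter="\t")
    return [row["full_picture"].strip() for row in reader
            if row.get("type") == "photo" and (row.get("full_picture") or "").strip()]


def write_image_list(tab_path, out_path="image-list.txt"):
    """Write the photo URLs of a Netvizz .tab export, one per line."""
    with open(tab_path, newline="", encoding="utf-8") as source:
        urls = photo_urls(source)
    with open(out_path, "w", encoding="utf-8") as target:
        target.writelines(url + "\n" for url in urls)
    return len(urls)


# Hypothetical usage: write_image_list("page_fullstats.tab")
```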

Phase 03: Prepare the final dataset

For Gephi to work we need two separate datasets: a nodes table and an edges table. A few Google Sheets functions can produce these tables from the Netvizz data.

Nodes table (using these header names will save a lot of pain later):

| id | type | amount |
|---|---|---|
| image 01 | image | n° of total reactions |
| reaction 01 | reaction | n° of times reaction 01 has been used |
| image 02 | image | n° of total reactions |

Edges table (using these header names will save a lot of pain later):

| source | target | weight |
|---|---|---|
| image 01 | reaction 01 | n° of times reaction 01 has been used for image 01 |
| image 01 | reaction 02 | n° of times reaction 02 has been used for image 01 |
| image 01 | reaction 03 | n° of times reaction 03 has been used for image 01 |
| image 02 | reaction 01 | n° of times reaction 01 has been used for image 02 |
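The same two tables can be produced in a script rather than with spreadsheet functions. A minimal sketch, assuming each post has been reduced to a record with a hypothetical `image` key (the downloaded file name) and one count per reaction (`love`, `haha`, `wow`, `sad`, `angry`; Like left out, as in the analysis). It uses the header names from the tables above and skips zero-weight edges:

```python
import csv
import io

REACTIONS = ["love", "haha", "wow", "sad", "angry"]


def build_tables(posts):
    """Return (nodes, edges) rows for a bipartite image-reaction network."""
    nodes, edges = [], []
    reaction_totals = dict.fromkeys(REACTIONS, 0)
    for post in posts:
        counts = {r: int(post[r]) for r in REACTIONS}
        nodes.append((post["image"], "image", sum(counts.values())))
        for reaction, count in counts.items():
            reaction_totals[reaction] += count
            if count > 0:  # no edge for reactions that were never used
                edges.append((post["image"], reaction, count))
    nodes.extend((r, "reaction", total) for r, total in reaction_totals.items())
    return nodes, edges


def to_csv(header, rows):
    """Serialize rows as CSV text with the given header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


posts = [
    {"image": "a.jpg", "love": 2, "haha": 0, "wow": 0, "sad": 5, "angry": 1},
    {"image": "b.jpg", "love": 0, "haha": 3, "wow": 0, "sad": 0, "angry": 0},
]
nodes, edges = build_tables(posts)
print(to_csv(["id", "type", "amount"], nodes))
print(to_csv(["source", "target", "weight"], edges))
```

The two CSV strings can be saved as separate files and imported into Gephi as the nodes and edges tables.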

Phase 04: Create the network

- Import the datasets into Gephi;
- Spatialize the graph;
- Export as SVG (it is important to also export the labels).

Phase 05: Make the final svg

- Open the SVG in any text editor that has a Find and Replace function;
- Remove all the <circle> tags for the images and all the <text> tags for the reactions;
- Replace the <text> tag of each image with an <image> tag whose attributes are: the same x and y attributes as the text tag, an xlink:href attribute with the name of the image, and width and height attributes (1% each; you can always change this value later if the images get too small);
- Save the SVG and put it in the same folder as all the images;
- Open the SVG with Illustrator and make the final touches.
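The label replacement in Phase 05 can also be scripted with Python's standard XML library. This is a sketch under assumptions, since the exact structure of Gephi's SVG export is not described here: it assumes every label is a `<text>` element whose content is either a reaction name or an image file name (as produced by the flatcurl mask). It drops reaction labels and swaps image labels for `<image>` elements with the same x and y and a 1% width and height. Removing the image `<circle>` elements is left to the manual step, because pairing circles with labels depends on the export:

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
XLINK_NS = "http://www.w3.org/1999/xlink"
ET.register_namespace("", SVG_NS)
ET.register_namespace("xlink", XLINK_NS)

# Hypothetical rule: these labels are reactions; any other label is an image file name.
REACTION_LABELS = {"like", "love", "wow", "haha", "sad", "angry"}


def labels_to_images(svg_text, size="1%"):
    """Replace image labels with <image> elements and drop reaction labels."""
    root = ET.fromstring(svg_text)
    text_tag = f"{{{SVG_NS}}}text"
    for parent in list(root.iter()):
        for child in list(parent):
            if child.tag != text_tag:
                continue
            label = (child.text or "").strip()
            position = list(parent).index(child)
            parent.remove(child)
            if label.lower() in REACTION_LABELS:
                continue  # reaction labels are removed entirely
            image = ET.Element(f"{{{SVG_NS}}}image", {
                "x": child.get("x", "0"),
                "y": child.get("y", "0"),
                "width": size,
                "height": size,
                f"{{{XLINK_NS}}}href": label,
            })
            parent.insert(position, image)  # keep the original drawing order
    return ET.tostring(root, encoding="unicode")


svg = ('<svg xmlns="http://www.w3.org/2000/svg"><g>'
       '<text x="10" y="20">photo_01.jpg</text>'
       '<text x="30" y="40">Sad</text></g></svg>')
print(labels_to_images(svg))
```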

5. Findings

Descriptive Statistics: From Sadness and Anger to Love

Tables 1 and 2 show the descriptive statistics of the final dataset. The most used reaction was Like (N = 12,525,629; M = 1959.58; SD = 2099.218). Among the emotional reactions, the most popular was Sad (N = 377,436; M = 59.05; SD = 157.974), followed by Angry (N = 140,285; M = 21.95; SD = 59.428), Love (N = 139,632; M = 21.84; SD = 44.39), Haha (N = 54,150; M = 8.47; SD = 43.043) and Wow (N = 8,694; M = 1.36; SD = 3.54). Negative reactions were therefore more popular overall than positive reactions. We later decided to omit the Like reaction from the analysis so as not to skew the data.

Table 1. Descriptive Statistics of Reactions, Shares, Comments and Engagement.

| | Reactions | Shares | Comments | Engagement |
|---|---|---|---|---|
| Mean | 2,386.21 | 145.23 | 94.31 | 2,625.74 |
| Std. Dev. | 2,539.225 | 394.039 | 153.166 | 2,893.267 |
| Min | 110 | 0 | 0 | 115 |
| Max | 33,290 | 14,683 | 2,275 | 41,153 |
| Total (frequency) | 15,293,190 | 930,785 | 604,404 | 16,828,379 |
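Engagement in Table 1 behaves as the sum of reactions, shares and comments: the totals add up exactly (15,293,190 + 930,785 + 604,404 = 16,828,379), and the means agree to within rounding (2,386.21 + 145.23 + 94.31 = 2,625.75 against the reported 2,625.74). A short check of that arithmetic; the function name is illustrative:

```python
def engagement(reactions, shares, comments):
    """Engagement as it behaves in Table 1: reactions + shares + comments."""
    return reactions + shares + comments


totals = {"reactions": 15_293_190, "shares": 930_785, "comments": 604_404}
print(engagement(**totals))  # 16828379

means = {"reactions": 2386.21, "shares": 145.23, "comments": 94.31}
print(round(engagement(**means), 2))  # 2625.75
```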

Table 2. Descriptive Statistics of the different reactions.

| | Like | Love | Wow | Haha | Sad | Angry |
|---|---|---|---|---|---|---|
| Mean | 1959.58 | 21.84 | 1.36 | 8.47 | 59.05 | 21.95 |
| Std. Dev. | 2099.218 | 44.390 | 3.540 | 43.043 | 157.974 | 59.428 |
| Min | 92 | 0 | 0 | 0 | 0 | 0 |
| Max | 28,868 | 1,017 | 145 | 1,923 | 2,621 | 914 |
| Total (frequency) | 12,525,629 | 139,632 | 8,694 | 54,150 | 377,436 | 140,285 |

How were Emotions Correlated?

In order to understand how the different reactions correlate, we performed a Pearson correlation between the five different reactions. Table 3 shows the correlations between the different reactions.

Table 3. Pearson correlations between Love, Haha, Wow, Angry and Sad.

| | Love | Wow | Haha | Sad | Angry |
|---|---|---|---|---|---|
| Love | 1 | | | | |
| Wow | .412** | 1 | | | |
| Haha | .171** | .290** | 1 | | |
| Sad | .045** | .102** | -.056** | 1 | |
| Angry | -.103** | .139** | -.014 | .733** | 1 |

** Correlation is significant at the 0.01 level (2-tailed).

Table 3 shows that the negative reactions (Sad and Angry) were highly correlated (r = .733, p < .001), meaning that they were frequently used together. By contrast, the positive reactions Haha and Love showed a weak but significant positive correlation (r = .171, p < .001). The difference between the strong correlation of the negative emotions and the weaker correlation of the positive emotions might suggest that, in the case of the Syrian revolution, users experienced positive emotions in a more nuanced way than negative ones. Sad and Love had a negligible correlation (r = .045, p < .001), which might nonetheless indicate a sense of pity expressed by combining these reactions. The somewhat more neutral Wow reaction was more strongly correlated with the positive emotions, Love (r = .412, p < .001) and Haha (r = .29, p < .001), than with the negative emotions, Angry (r = .139, p < .001) and Sad (r = .102, p < .001). A cluster analysis also showed three distinct clusters that generally coincide with the Pearson correlations: one cluster clearly grouped sadness and anger, another grouped love and haha, and a final cluster consisted of a mixture of all responses.

Figure 5 is a network that visualizes the correlations between emotions (Reactions)

The link between emotions and image tags

When examining datasets of sensitive images, the Vision API is a useful tool to quickly classify several elements present in an image. We double checked a sample of the images to control for classification accuracy. Then we removed image tags that were clearly misclassified, or not relevant to the analysis. These words were: text, font, product, advertising, tourism, adventure, geological, phenomena and brand.

Although the classification is largely objective (e.g. tree, vehicle, soldier), the API was also able to detect some nuances in the images, such as ‘fun’, depicted in Figure 6. For brevity, we only examined the top 10 tags after cleanup: recreation, vehicle, protest, event, crowd, mode of transport, soldier, green, demonstration and tree.

Figure 6. An example image tagged with ‘fun’

We went on to analyze three of the top 10 image tags, each with distinct characteristics:

- Soldier (personal, individual)
- Protest (impersonal)
- Vehicle (an inanimate object)

Reactions to images tagged with ‘Soldier’

In the total dataset, there were 5,979 images tagged with ‘soldier’. Images tagged with soldier differed significantly from images without that tag in the number of comments (t(434) = -8.44, p < .001), reactions (t(450) = -9.24, p < .001) and engagement (t(453) = -8.95, p < .001). For succinctness, the exact means and standard deviations are given in Table 4. Overall, images depicting a soldier elicited significantly more comments, reactions and engagement than images that did not.
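The text does not name the t-test variant. The degrees of freedom reported for a single tag change from measure to measure (e.g. t(434), t(450) and t(453) for soldier), which is consistent with an unequal-variance (Welch) t-test rather than Student's test, whose df would stay at n1 + n2 - 2. The sketch below therefore assumes Welch's test; it computes t and the Welch-Satterthwaite df only (no p-value). The post structure (`tags` as a set, numeric fields such as `comments`) and the helper names are hypothetical, and the data are toy values:

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    se_a = variance(group_a) / len(group_a)  # squared standard error of group a
    se_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(group_a) - 1)
                               + se_b ** 2 / (len(group_b) - 1))
    return t, df


def split_by_tag(posts, tag, field):
    """Values of `field` for posts with and without a given image tag."""
    present = [post[field] for post in posts if tag in post["tags"]]
    absent = [post[field] for post in posts if tag not in post["tags"]]
    return present, absent


posts = [
    {"tags": {"soldier", "crowd"}, "comments": 210},
    {"tags": {"soldier"}, "comments": 150},
    {"tags": {"protest"}, "comments": 40},
    {"tags": {"crowd"}, "comments": 95},
    {"tags": set(), "comments": 60},
]
present, absent = split_by_tag(posts, "soldier", "comments")
t, df = welch_t(absent, present)
print(f"t({df:.0f}) = {t:.2f}")
```

The signs in Table 4 appear to correspond to "absent minus present" (negative where tagged images score higher), which is why the sketch passes the groups in the order (absent, present).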

Further examination of the reactions yielded interesting results, with significant differences for all reactions. Images tagged with soldier received more Love (t(453) = -8.02, p < .001), Wow (t(453) = -7.77, p < .001) and Haha (t(426) = -6.22, p < .001) reactions, and significantly fewer Angry (t(548) = 5.01, p < .001) and Sad (t(874) = 10.20, p < .001) reactions. Mean differences are again given in Table 4. Positive emotions are thus more strongly related to images of a soldier, which suggests that the page’s community is passionate about the revolution, of which a soldier is a clear symbol.

Reactions to images tagged with ‘Protest’

There were 5,729 images tagged with protest in the dataset. In contrast to the soldier images, those tagged with protest received significantly fewer comments (t(1942) = 20.8, p < .001), reactions (t(1016) = 10.11, p < .001), shares (t(1947) = 9.6, p < .001) and engagement (t(1040) = 10.76, p < .001). The trend continued for the individual reactions, with significantly fewer Love (t(1190) = 3.43, p < .001), Wow (t(2040) = 11.98, p < .001), Haha (t(3106) = 9.06, p < .001), Sad (t(4449) = 20.27, p < .001) and Angry (t(6310) = 24.55, p < .001) reactions. The means are given in Table 4. We think the lack of emotionality and overall engagement around protest images is due to the images being impersonal and not identifiable to the page’s community.

Reactions to images tagged with ‘Vehicle’

Of the 5,730 images tagged with vehicle, tanks were most commonly depicted. Overall, images with the vehicle tag received significantly more reactions (t(801) = -3.91, p < .001) and engagement (t(808) = -3.28, p < .001). The reaction metrics show significantly more Love (t(809) = -2, p = 0.04) and Wow (t(709) = -2.2, p = 0.03) reactions to these images, and significantly fewer Haha (t(1468) = 2.95, p = 0.01), Sad (t(1141) = 3.84, p < .001) and Angry (t(1072) = 3.56, p < .001) reactions. As with images tagged with ‘soldier’, vehicles appear to elicit a positive emotional response, evidenced by the significantly higher reactions and engagement. People thus engaged with and reacted to this content, even though they did not comment on it more. We posit that tanks, along with soldiers, may be a symbol of the revolution.

Table 4. T-tests: reactions and engagement for three of the most popular image tags. Values are M (SD); n.s. = not significant.

| Measure | Soldier present | Soldier absent | Soldier t-test | Protest present | Protest absent | Protest t-test | Vehicle present | Vehicle absent | Vehicle t-test |
|---|---|---|---|---|---|---|---|---|---|
| Comments | 185.76 (232.8) | 88.01 (144) | t(434) = -8.44, p < .001 | 35 (61.5) | 101.15 (159) | t(1942) = 20.8, p < .001 | n.s. | n.s. | n.s. |
| Reactions | 3711 (3048) | 2295 (2475) | t(450) = -9.24, p < .001 | 1683 (1792) | 2467 (2600) | t(1016) = 10.11, p < .001 | 2792 (2902) | 2338 (2489) | t(801) = -3.91, p < .001 |
| Shares | n.s. | n.s. | n.s. | 74.41 (159.1) | 153.4 (412) | t(1947) = 9.6, p < .001 | n.s. | n.s. | n.s. |
| Engagement | 4041 (3354) | 2528 (2833) | t(453) = -8.95, p < .001 | 1793 (1981) | 2722 (2966) | t(1040) = 10.76, p < .001 | 3005 (3227) | 2580 (2848) | t(808) = -3.28, p < .001 |
| Love | 41.40 (51.72) | 20.49 (43.52) | t(453) = -8.02, p < .001 | 16.25 (26.41) | 22.49 (45.98) | t(1190) = 3.43, p < .001 | 25.38 (49.06) | 21.43 (43.79) | t(809) = -2, p = 0.04 |
| Wow | 2.87 (4.12) | 1.26 (3.47) | t(453) = -7.77, p < .001 | 0.58 (1.39) | 1.45 (3.70) | t(2040) = 11.98, p < .001 | 1.86 (6.6) | 1.3 (2.97) | t(709) = -2.2, p = 0.03 |
| Haha | 31.26 (78.90) | 6.90 (38.91) | t(426) = -6.22, p < .001 | 2.12 (13) | 9.21 (45.19) | t(3106) = 9.06, p < .001 | 5.75 (21.96) | 8.79 (44.88) | t(1468) = 2.95, p = 0.01 |
| Sad | 23.59 (62.34) | 61.50 (162.23) | t(874) = 10.20, p < .001 | 11.41 (37.29) | 64.56 (165.5) | t(4449) = 20.27, p < .001 | 43.75 (101.55) | 60.86 (163.28) | t(1141) = 3.84, p < .001 |
| Angry | 11.93 (40.39) | 22.64 (60.46) | t(548) = 5.01, p < .001 | 2.44 (8.48) | 24.2 (62.31) | t(6310) = 24.55, p < .001 | 16.32 (40.96) | 22.61 (61.22) | t(1072) = 3.56, p < .001 |

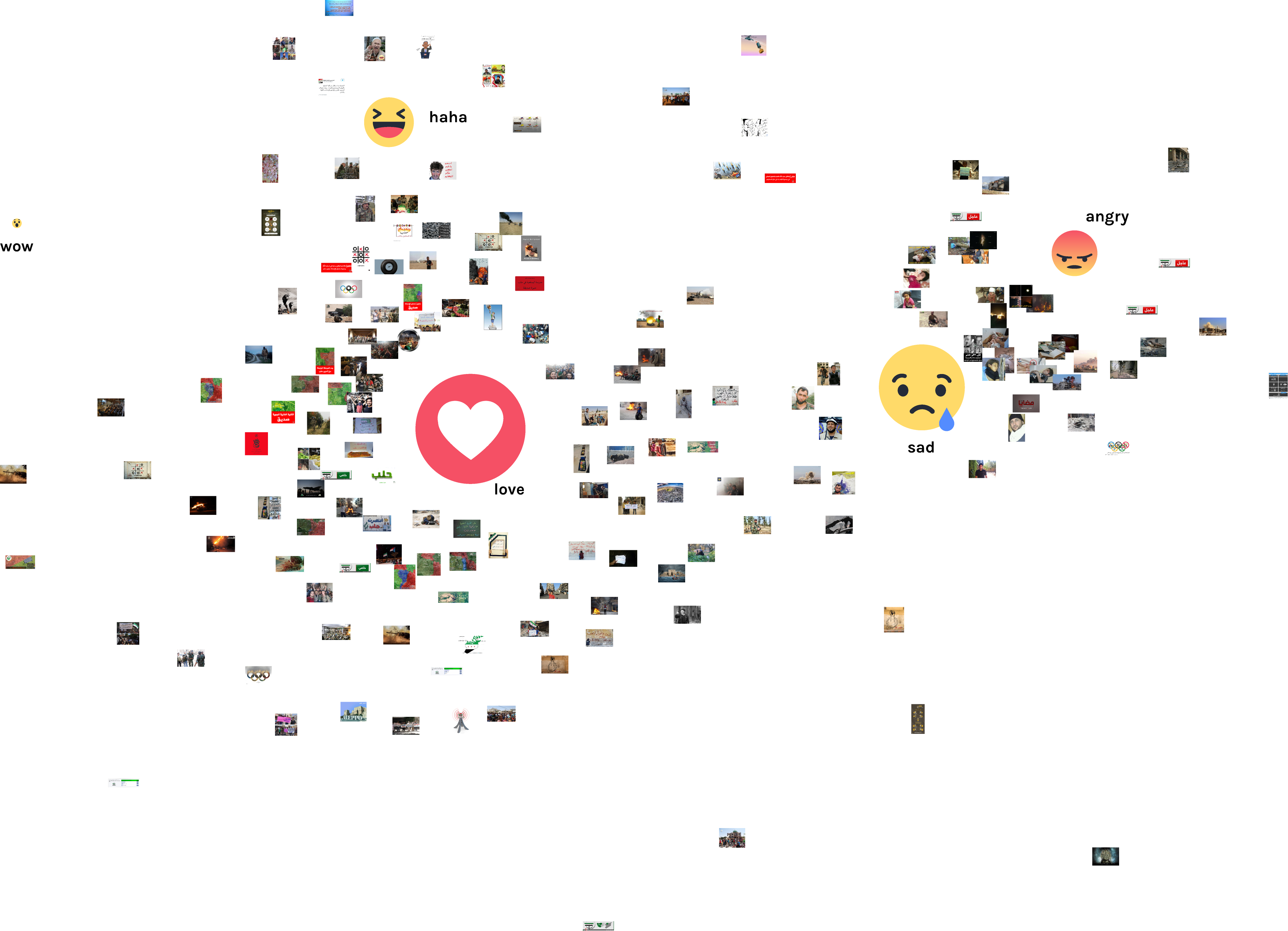

From there, we examined the images most linked to the correlated emotions Love and Haha. These images were predominantly tagged as crowd/military (with militia and event as synonyms), depicting celebrations by the rebel parties.

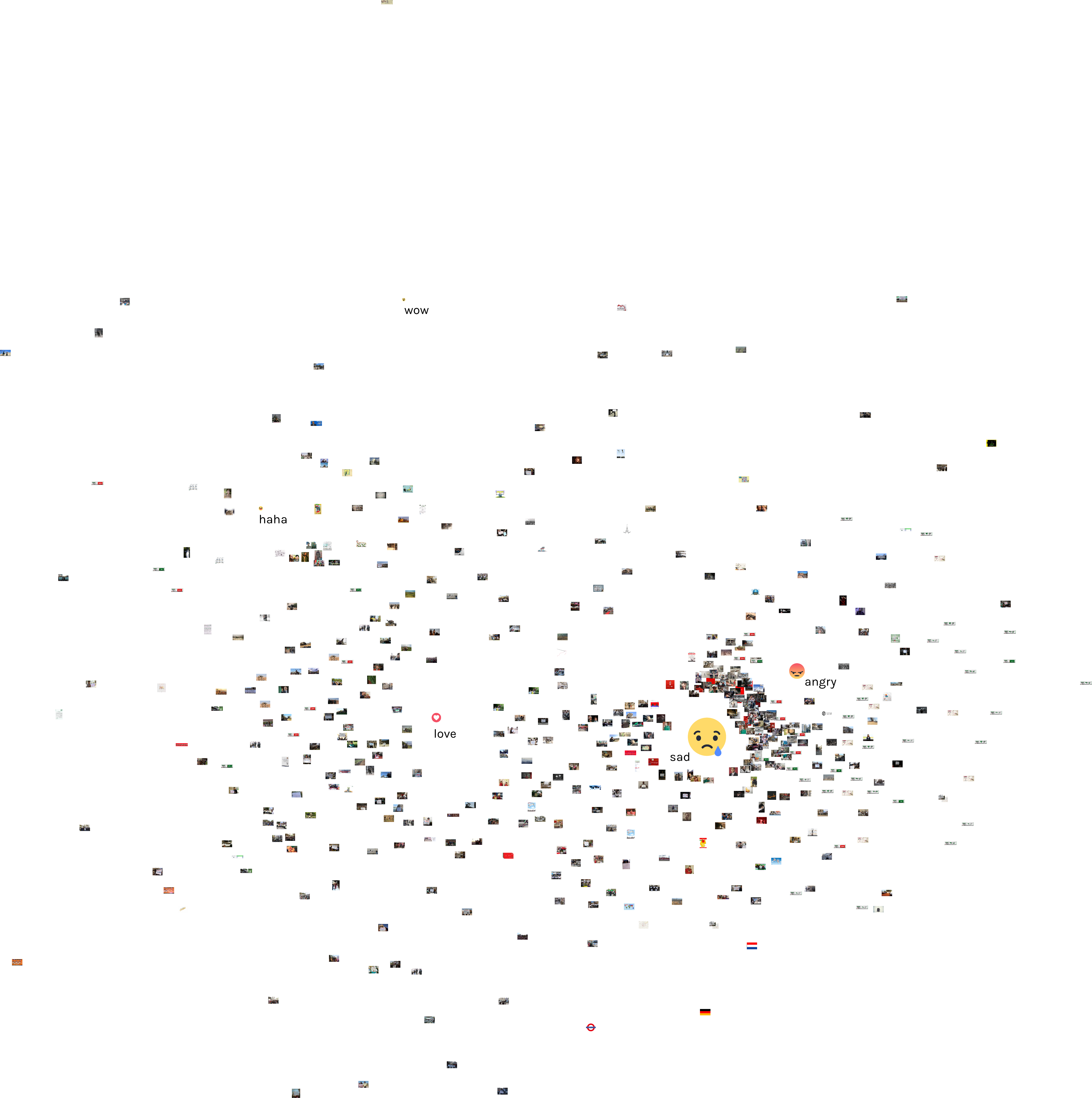

In the top ten tags linked to Sad/Angry images, the tags described the actual content, which (apart from one image, see Figure 7) predominantly consisted of dead people, often children. The assigned tags were descriptions such as nose, skin and facial.

Figure 7. The image most frequently linked to a mixture of sad and angry emotions

Network analyses of images and Reactions, images and Vision API tags, and tags and Reactions

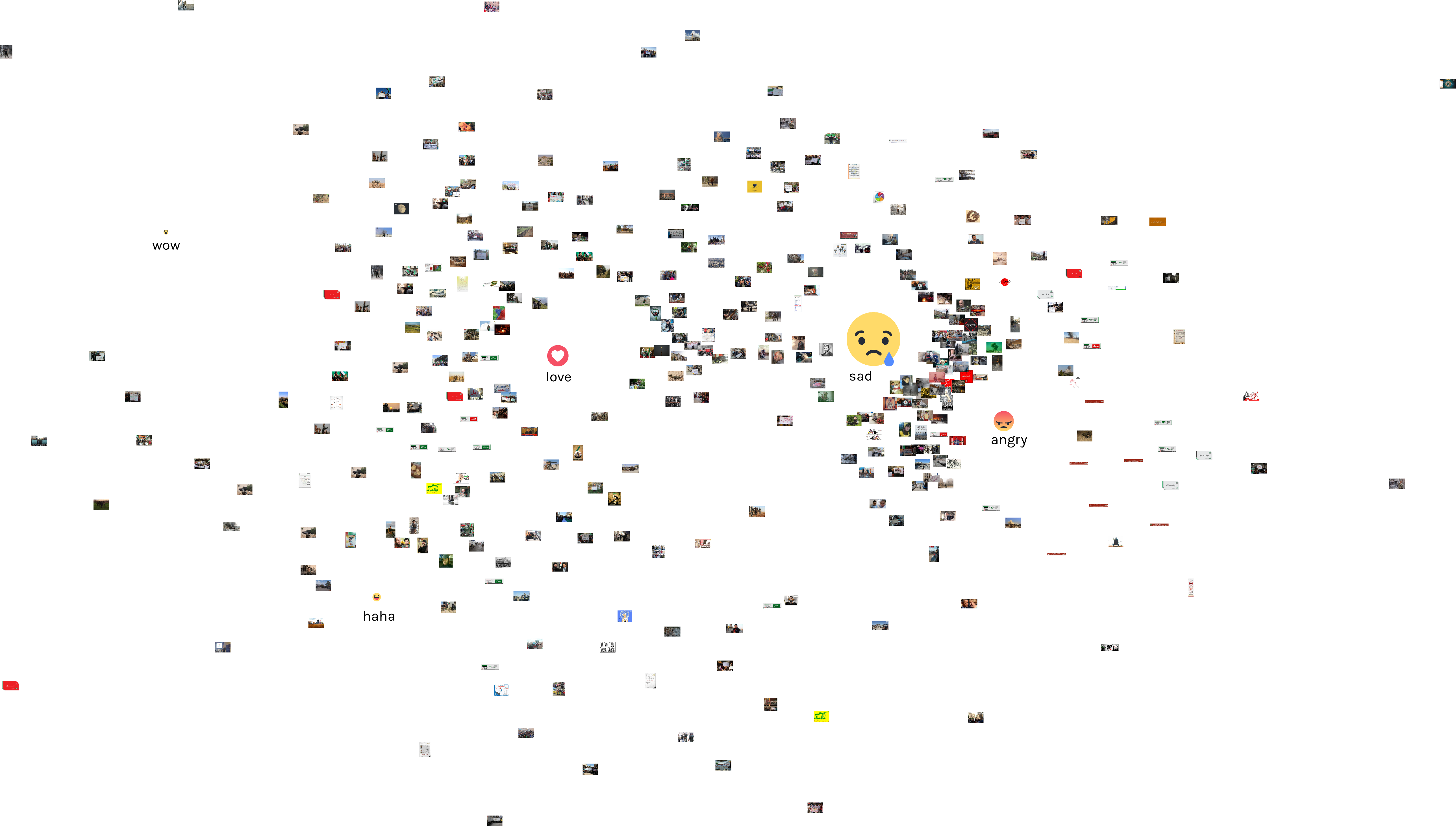

Figure 8 shows the network of images and Reactions for the representative sample.

Figure 9 shows the network of Images-Reactions for the Love-only usage spike (Love Explosion)

Figure 10 shows the network of Images-Reactions for the Sad-only usage spike (Sad Beats)

Plotting the images using ImagePlot and ImageSorter

The sample was plotted over time and by colour, which gave some interesting clues about duplicates, some of which were also outliers, for example in the angry space (see the image-reactions bipartite network of the Sad Beats Love spike). Text-based images were spread across the Reactions space (see the bipartite network of the sample), suggesting a strong role for the nature of the text in evoking certain emotions. These emotion-specific outliers and duplicates are input for further research.

Figure 11 shows the scraped images found within the sample set of 363; duplicates are grouped together using the 'group by color' option in ImageSorter.

6. Discussion

Google's Vision API can be a useful tool for image analysis. It tags images in a largely objective, descriptive way, but the classification is often wrong, especially in the case of the Syrian Revolution Facebook page, where some images were tagged as 'adventure' and 'tourism'. When relating the images to the reactions quantitatively, it therefore helps to clean the Vision API tags before drawing conclusions. If the intention is simply to examine whether the presence of a certain visual element elicits different emotional reactions, statistical analysis combined with the Vision API is a useful way to generate such primary conclusions. For instance, it was an interesting finding that the presence of a soldier elicited positive emotional responses such as Love and Haha, indicating that this audience sees soldiers as a positive symbol of the revolution. A similar positive response was found for images tagged with 'vehicle', which on examination usually referred to tanks. So not only soldiers (identifiable individuals) but also tanks were seen as positive symbols, which leads us to believe that tanks may also be a symbol of the revolution. By contrast, images tagged with protest, showing unidentifiable crowds, received significantly fewer emotional responses across the board, both positive and negative. Images of protests therefore appear less likely to elicit emotional responses from the audience of the Syrian Revolution Facebook page.

Future research should look further into the role of text in the use of Reactions. In some cases, visual duplicates clearly show different Reactions metrics. Zooming in on these duplicates and their accompanying texts (sometimes the text in the image, sometimes the post texts and headers) would provide a more refined understanding of the use of Reactions buttons.

Further research should also explore other spaces on Facebook to see whether our findings hold up. On the Syrian Revolution page the Sad and Angry buttons correlate strongly; however, since other spaces feature different textual and visual content, the use of Reactions buttons there might reveal different correlations or more distinct uses of the buttons.

NOTE: the latter was done in week 2 of the summer school; see the week 2 project pages of Emotional Clicktivism.

7. Conclusions

Although Facebook is clearly evolving away from being a ‘happy’, positive-only platform in terms of its actual content, the platform’s button affordances do not fit all the emotions users actually experience. Reactions allow a more fine-grained understanding of affective responses to news content; collectively, however, users re-appropriate the buttons in such a way that new emotions emerge from their combined use, mainly of the Sad and Angry buttons. These combinations seem to point to an emotion that can be perceived as frustration. The same happens in posts where Love and Wow are positively correlated, where we assume the image arouses feelings of captivation or enthrallment.

However, the use of these buttons does reflect recent notions of the structural classification of emotions that reconcile categorical and dimensional theories. Categorical theories (Hosany and Gilbert 2010, p. 35) assume that emotions are a limited number of discrete entities (Izard, 1977) and that these emotions can only be experienced separately. This perspective has been criticized on the grounds that more than one emotion can be experienced at the same time (Lee et al. 2007). Dimensional theories then entered the stage: according to these, emotions should be understood as subjective experiences and global feelings. Emotions are therefore not classifiable into a limited number of affective states, but form an infinite range of emotional states evaluated from a multidimensional perspective.

The hierarchical theory of emotions (Laros and Steenkamp, 2005) introduces a more recent perspective with a superordinate level of emotion (positive vs. negative affect), a basic emotion level (four basic positive and four basic negative emotional states) and a subordinate level (42 classified emotions). The combined use of the Sad and Angry buttons and the more distinct use of the positive buttons seem to point in the direction of this hierarchical perspective.

Furthermore, by looking into the associations between image characteristics and the Reactions that go with them, we can see what 'triggers' the community of a page emotionally. This can provide more insight into what constitutes the emotional impact or affect of images within and outside certain communities. In week 2 of this summer school, we therefore applied the methodology to pages followed by different communities (Women's March and Black Lives Matter), and we looked into news pages that we hypothesized were followed by a more heterogeneous public. The question is then whether this more diverse public uses Reactions differently.

8. References

Berger, J., Milkman, K. (2012). What makes online content viral? Journal of Marketing Research, 192-205.

Burgess, J., Green, J. (2009). YouTube and participatory culture. Cambridge: Polity Press.

Couldry, N., Hepp, A. (2017). The mediatized construction of Reality. Cambridge: Polity Press.

Gerlitz, C., Helmond, A. (2013). The Like Economy: social buttons and the data-intensive web. New Media and Society.

Highfield, T., Leaver, T. (2016). Instagrammatics and digital methods: studying visual social media, from selfies and GIFs to memes and emoji. Communication Research and Practice, 2(1).

John, N. (2017). The age of Sharing. Cambridge: Polity Press.

Latour, B. (2007). Reassembling the Social: An Introduction to Actor-Network-Theory. New York: Oxford University Press.

Osofsky, J. (2016). Information about trending topics. Facebook Newsroom. https://newsroom.fb.com/news/2016/05/information-about-trending-topics/

Rogers, R. (2013). Digital methods. Cambridge, Massachusetts; London: The MIT Press.

Stieglitz, S., Dang-Xuan, L. (2013). Emotions and Information Diffusion in Social Media: Sentiment of Microblogs and Sharing Behavior. Journal of Management Information Systems, 29(4), 217-247.

Tian, Y., Galery, T., Dulcinati, G., Molimpakis, E., Sun, C. (2017). Proceedings of the Fifth International Workshop on Natural Language Processing for Social Media, Valencia, Spain, April 3-7, 2017.


Topic revision: 09 Aug 2017, MarloesGeboers

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
