Networked content

Issues raised by the object of study

To date the approaches to the study of Wikipedia have followed from certain qualities of the online encyclopedia, all of which appear counter-intuitive at first glance. One example is that Wikipedia is authored by "amateurs," yet is surprisingly encyclopedia-like, not only in form but in accuracy (Giles, 2005). The major debate concerning the quality of Wikipedia vis-a-vis Encyclopedia Britannica is also interesting from another angle. How do the mechanisms built into Wikipedia's editing model (such as dispute resolution) ensure quality? Or, perhaps quality is the outcome of Wikipedia's particular type of "networked content," i.e., a term that refers to content 'held together' (dynamically) by human authors and non-human tenders, including bots and alert software. One could argue that the bots keep not just the vandals, but also the experimenters, at bay, those researchers as well as Wikipedia nay-sayers making changes to a Wikipedia entry, or creating a new fictional one, and subsequently waiting for something to happen (Chesney, 2006; Read, 2006; Magnus, 2008). Another example of a counter-intuitive aspect is that the editors, Wikipedians, are unpaid yet committed and highly vigilant. Do particular 'open content' projects have an equivalent community spirit, and non-monetary reward system, to that of open source projects? (See UNU-MERIT's Wikipedia Study.) Related questions concern the editors themselves. Research has been undertaken in reaction to findings that there is only a tiny ratio of editors to users in Web 2.0 platforms. This is otherwise known as the myth of user-generated content (Swartz, 2006). Wikipedia co-founder, Jimbo Wales, has often remarked that the dedicated community is relatively small, at just over 500 members. Beyond the small community there are also editors who do not register with the system. 
The anonymous editors and the edits they make are the subjects of the Wikiscanner tool, developed by Virgil Griffith while studying at the California Institute of Technology. Anonymous editors may be 'outed,' leading to scandals, as collected by Griffith himself. Among the ways proposed to study the use of Wikipedia's data, here the Wikiscanner is repurposed for comparative entry editing analysis. For example, what do a country's edits, or an organization's, say about its knowledge? Put differently, what sorts of accounts may be made from "the places of edits"?

"The Places of Edits" and Other Approaches to Study Wikipedia

Wikipedia is an encyclopedia as well as a source for more than content. It is also a compendium of network activities and events, each logged and made available. In some sense it has in-built reflection upon itself, as in the process by which an entry has come into being, something missing from encyclopedias and most other 'processed' or edited works more generally. This process by which an entry matures is one means to study Wikipedia. The materials are largely the revision history of an entry, but also its discussion page, perhaps its dispute history, its lock-downs and reopenings. Another approach to network activity would rely on the edit logs of an entry, where one may view editor names, dates, times and changes. In the study of Wikipedian vigilance, the researcher is interested in how page vandalism is quickly expunged, or not, as in the infamous case of John Seigenthaler's biography entry. Herein lies the vital role assumed by bots, as well as questions concerning the level of dependency of Wikipedia on bots generally, including for each language version. Wikipedia also may be thought of as a collection of sensitive subject matters, where editors (anonymous or otherwise) make careful and subtle changes so as to set the record straight, and regain control of the narrative. (Do the tone of the NPOV [neutral point of view], and the style of an encyclopedia entry, eventually allow for settlement or closure, or does that reside in human-bot relations?) The Wikiscanner has been crucial in making connections between the location of the anonymous editor and the edit. What do the edits say about the management of content? What do the places of edits tell us about entries more generally?

Literature

Chesney, Thomas (2006). "An empirical examination of Wikipedia’s credibility," First Monday, 11(11), November, http://firstmonday.org/issues/issue11_11/chesney/.

Giles, Jim (2005). "Internet encyclopedias go head to head." Nature. 438:900-901, http://www.nature.com/nature/journal/v438/n7070/full/438900a.html.

Magnus, P.D. (2008). "Early response to false claims in Wikipedia," First Monday, 13(9), September, http://www.uic.edu/htbin/cgiwrap/bin/ojs/index.php/fm/article/viewArticle/2115/2027/.

Read, Brock (2006). "Can Wikipedia Ever Make the Grade?" Chronicle of Higher Education, 53(10): A31, http://chronicle.com/free/v53/i10/10a03101.htm.

Schiff, Stacy (2006). "Know it all. Can Wikipedia conquer expertise?" The New Yorker. 31 July, http://www.newyorker.com/archive/2006/07/31/060731fa_fact/.

Swartz, Aaron (2006). "Who writes Wikipedia?" Raw Thoughts blog entry, 4 September, http://www.aaronsw.com/weblog/whowriteswikipedia/.

Sample Projects

The Places of Edits

Object

In this project, the Wikiscanner is repurposed in order to inquire into the (anonymous) Wikipedia activity of Dutch universities. For a full post, look here: http://mastersofmedia.hum.uva.nl/2007/10/07/repurposing-the-wikiscanner-comparing-dutch-universities-edits-on-wikipedia

Approach

The approach is to look into all the actors (the Dutch universities) and their (anonymous) edits on Wikipedia in order to be able to say something about the amount and type of edits made. Will university 'profiles' emerge out of the Wikipedia data? This project also aims to 'test' the Wikiscanner as a research tool (can it get beyond 'scam research'?).

Method

- Define a set of actors, in this case "Dutch universities".

- Go to the Wikiscanner and type in the actors.

- Define the timespan of edits to be discovered by the Wikiscanner.

- Get the list of results.

- Go to the TagCloudGenerator to transform the list into a cloud.
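
The counting step behind the tag cloud can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout (actor, edited entry, date), the function name `tag_weights` and the sample records are hypothetical and are not taken from the Wikiscanner or the TagCloudGenerator. In the project itself the list of results comes from the Wikiscanner.

```python
from collections import Counter
from datetime import date

# Hypothetical result list: (actor, edited entry, date of edit).
# These records are invented for illustration only.
EDITS = [
    ("University A", "Physics", date(2006, 3, 1)),
    ("University A", "Physics", date(2006, 5, 12)),
    ("University A", "Football", date(2006, 7, 30)),
    ("University B", "Physics", date(2006, 4, 2)),
    ("University A", "Physics", date(2008, 1, 15)),  # outside the timespan used below
]


def tag_weights(edits, actor, start, end):
    """Count edits per entry for one actor within the timespan [start, end].

    Returns (entry, count) pairs, most edited first. The counts can serve
    as the word weights of a tag cloud.
    """
    counts = Counter(
        entry
        for who, entry, day in edits
        if who == actor and start <= day <= end
    )
    return counts.most_common()


if __name__ == "__main__":
    for entry, count in tag_weights(
        EDITS, "University A", date(2006, 1, 1), date(2007, 12, 31)
    ):
        print(entry, count)
```

Running the same function for each actor in the set gives one weighted list per university, which is what allows 'profiles' to be compared.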

Findings

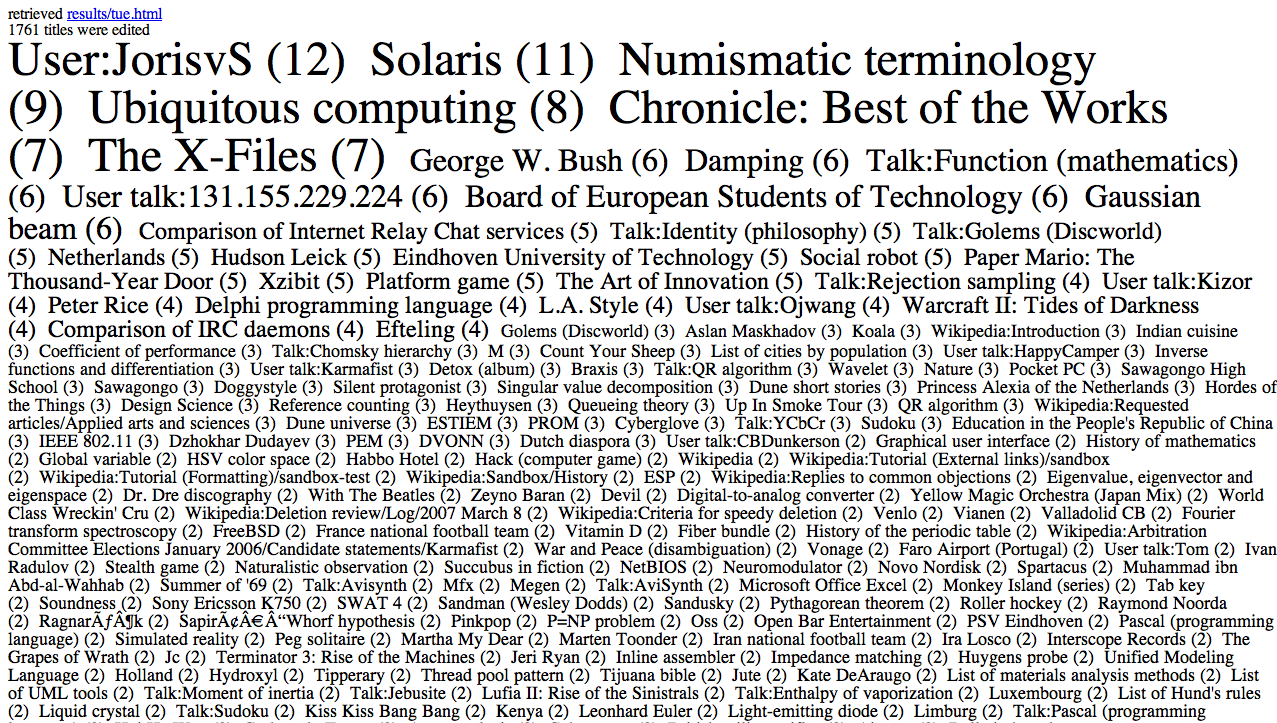

The findings are visualised in lists as well as tag clouds, providing an overview of the number and types of topics edited. The image above shows a tag cloud of the topics edited by a single university, in this case the Technical University of Eindhoven. For more visual results, go here: https://wiki.digitalmethods.net/Dmi/DutchUniversities#Overall_Activity

"While the question has mostly been, 'what can the Wikiscanner tell us about Dutch universities', the reverse is more interesting. What do the exercises carried out here say about the possible uses of the Wikiscanner for Wikipedia research? The Wikiscanner, with some tweaking, makes it possible to 'localize' Wikipedia activity by linking edits to specific institutions or within geographical borders. Such a move adds a dimension to studies of Wikipedia. Where these have had to hang on to notions of the 'virtual community' in describing the ins and outs of collaboration online, the kind of research hinted at here will make it possible to rethink this production as both a local and global operation." [Michael Stevenson, MoMblog, 07 October 2007]

The Dependency of Wikipedia on Bots

In the well-known video about the history of a Wikipedia entry (the heavy metal umlaut), the narrator, Jon Udell, is astounded at the speed with which a vandalized page is corrected by Wikipedia's users. A vandalized page is reverted to the previous version of the entry within a minute. "Wikipedians" appear extremely vigilant. An underinterrogated aspect of Wikipedians' vigilance is the role of robots, or bots: software that often automatically monitors Wikipedia pages for changes. When a change is made to a page, a bot will come calling, examining the change. Perhaps it will add a link to another Wikipedia (one in a different language). Perhaps it will send an alert to a user, telling the Wikipedian about a reversion or any other manner of activity. Thus changes to Wikipedia pages trigger bot visits. Vigilance is often bot-driven.

In this project we are interested in the reliance of Wikipedia on bots in order for the collectively authored, user-generated encyclopedia to maintain itself.

What is the degree of dependency of Wikipedia on bots?

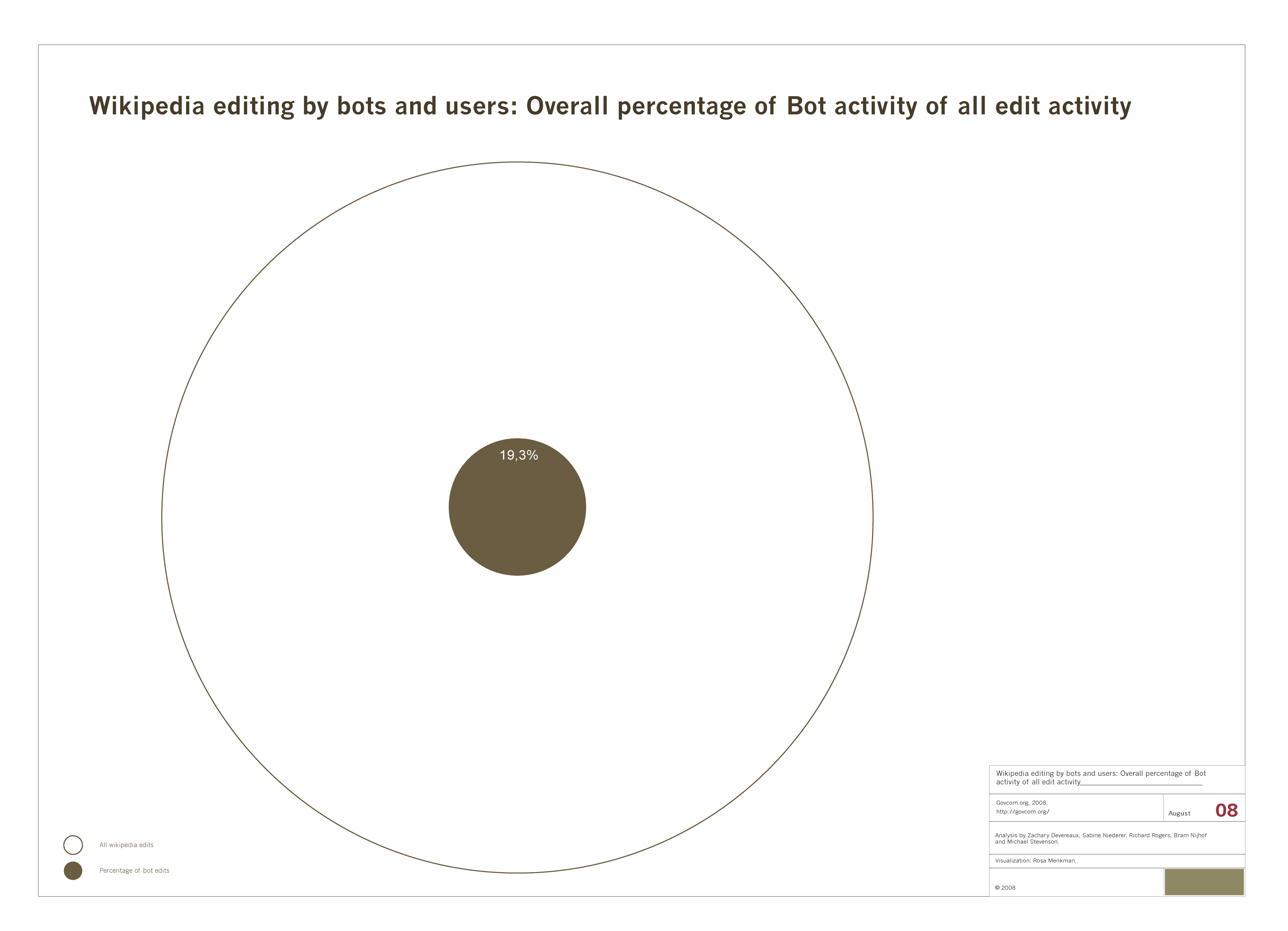

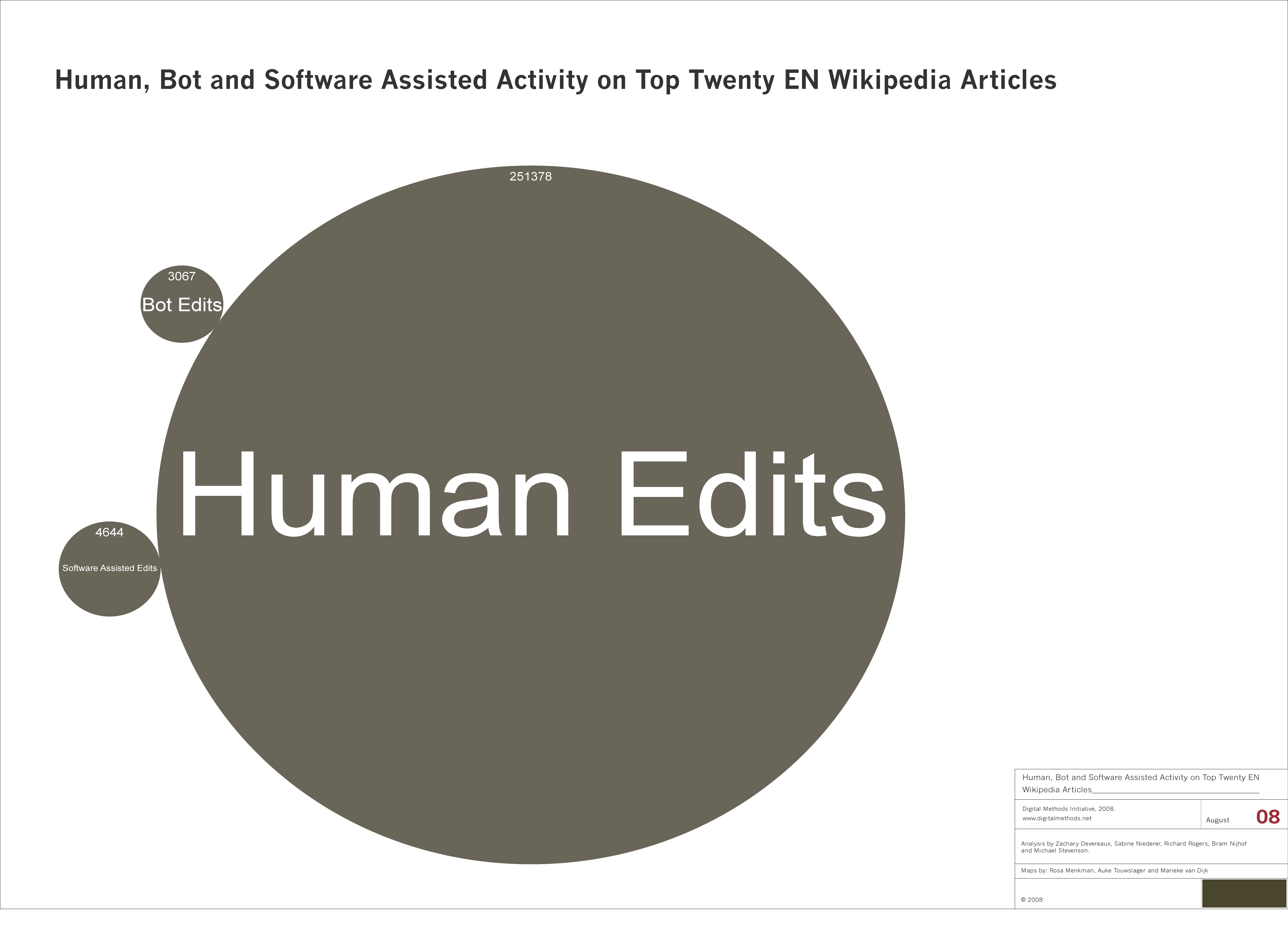

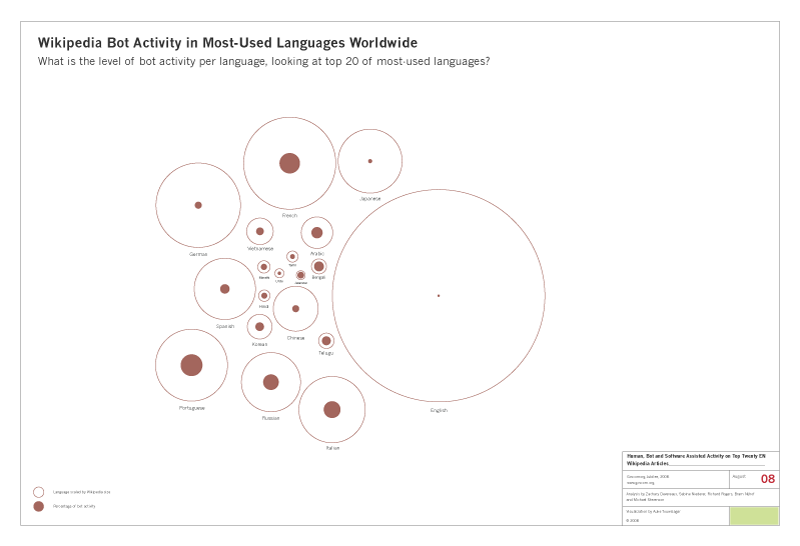

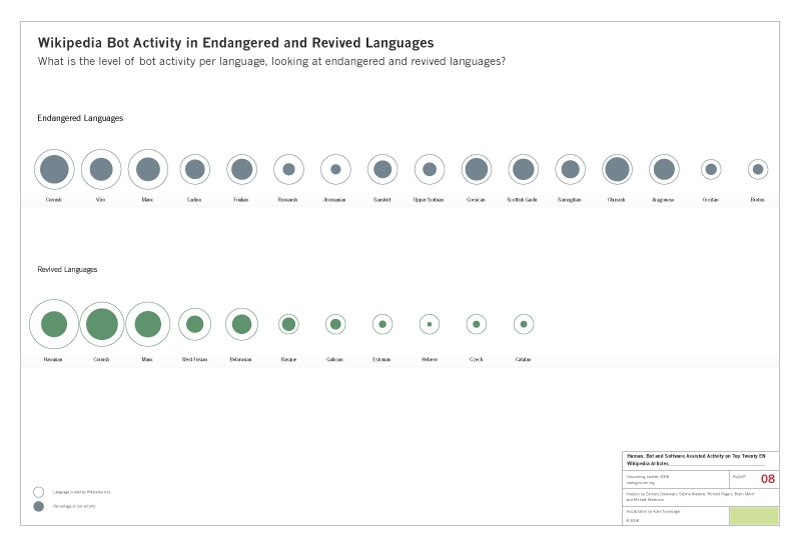

In order to answer this question, we looked into the amount of activity in Wikipedia by human users and by bots (as well as by human users assisted by monitoring software). Remarkably, the most active Wikipedians are bots, followed by human Wikipedians, many of whom, presumably, use the Wikipedia monitoring tools and are alerted when changes are made to Wikipedia pages. Thus, initially, we are interested in the overall picture. How many edits are made by humans, and how many by bots? According to Wikipedia bot activity data (which we later found to be incomplete), 39% of all edits to all Wikipedias (that is, to all the different language versions) are made by bots. After adding the English-language data (which was missing), we found that the overall share of edits by bots in all Wikipedias is 19%. Having noticed the great discrepancy between bot activity in English and the other language versions of Wikipedia, we became interested in the level of bot activity per language. There are currently 264 language versions of Wikipedia. The English-language Wikipedia is the largest in size, followed by the German in the number two position. Bot activity varies greatly by language version. One small Wikipedia has seen 97% of its edits made by bots.
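
Why the overall share drops so sharply once a large language version is added can be seen from the arithmetic of a pooled share: bot edits are summed over all language versions and divided by the sum of all edits, so a very large version with comparatively few bot edits pulls the overall figure down. The following Python sketch illustrates this. The function name `bot_share`, the language codes and all counts are hypothetical, chosen only to make the arithmetic visible. They are not the project's data.

```python
def bot_share(edit_counts):
    """Return the pooled share of bot edits across language versions.

    edit_counts maps a language code to a (bot_edits, total_edits) pair.
    The share is computed over the summed counts, not as an average of
    the per-language percentages.
    """
    bot_edits = sum(bots for bots, _ in edit_counts.values())
    total_edits = sum(total for _, total in edit_counts.values())
    return bot_edits / total_edits


# Hypothetical counts, invented for illustration only.
without_large_version = {"xx": (400, 1000), "yy": (200, 1000)}
with_large_version = dict(without_large_version, zz=(200, 8000))

if __name__ == "__main__":
    print(f"{bot_share(without_large_version):.0%}")  # 600 of 2,000 edits
    print(f"{bot_share(with_large_version):.0%}")     # 800 of 10,000 edits
```

The same function applied to a single language version gives the per-language share of bot activity.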

The graphics show the findings with respect to the share of edits by bots versus humans, as well as per language. In the final graphic, we explore the radical idea of the dependency of endangered languages on bots. (It is not so much that the endangered languages rely on bots for their survival as that bot activity is remarkably high in endangered languages. This suggests not only that there are few humans in those language spaces, which we already know, but also that the bots are creating links and otherwise integrating the languages into the larger Wikipedia undertaking.)

Findings in the form of graphics

1. Wikipedia editing by bots and humans: overall percentage of bot activity of all edit activity.

2. Shares of human, bot and software-assisted human edits in the top twenty most active Wikipedia entries in the English-language version.

3. Share of Wikipedia bot activity in the twenty most used languages worldwide.

4. Wikipedia bot activity in endangered and revived languages.

Exercise

Wikipedia Article History

The exercise is to show and make an account of a Wikipedia entry over time. One may begin by exploring a Wikipedia entry by using the History Flow Visualization Application. Instructions for use are here: https://wiki.digitalmethods.net/Dmi/HistoryFlowHowTo

Create a narrated video of the history of a Wikipedia entry. An example: http://weblog.infoworld.com/udell/gems/umlaut.html. (The video is made using screencast software.) To do so:

- Choose a Wikipedia entry.

- Peruse the 'change log' for an idea of the activity on the topic.

- Choose interesting dates, or extremes in activity.

- Screen capture the changes.

- What story do the changes tell?
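
As an aid to choosing interesting dates, the extremes in activity can be found by counting revisions per day. The sketch below assumes that the revision timestamps of an entry have already been copied from its history page into a list of ISO 8601 strings. The function name `busiest_days` and the sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime


def busiest_days(timestamps, top=3):
    """Return the `top` days with the most revisions as (day, count) pairs.

    Each timestamp is expected in the form 2005-01-14T09:12:00Z.
    """
    per_day = Counter(
        datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ").date().isoformat()
        for stamp in timestamps
    )
    return per_day.most_common(top)


# Hypothetical revision timestamps, invented for illustration only.
REVISIONS = [
    "2005-01-14T09:12:00Z",
    "2005-01-14T09:13:30Z",
    "2005-01-14T18:45:10Z",
    "2005-02-02T11:00:00Z",
    "2005-02-02T11:05:00Z",
    "2005-03-20T07:30:00Z",
]

if __name__ == "__main__":
    for day, count in busiest_days(REVISIONS, top=2):
        print(day, count)
```

Days with many revisions in quick succession are candidates for screen capture, since they may indicate vandalism and reverts, disputes or breaking events.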

Topic revision: r20 - 06 Jan 2016, ErikBorra

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.